It’s not been a good month for hyperscalers, or beds!

Two recent cloud outages got me thinking a bit: Microsoft’s global Front Door outage, which impacted not just customer services but Microsoft’s own, and this week’s AWS outage in US East 1, where a simple DNS issue on one service cascaded to take down huge numbers of services in the region, even ones not directly related, including, most critically, my own bed…

Yes, I’m one of those with the now somewhat famous smart mattress topper that has made itself stupidly cloud-centric. I don’t even have the ‘AI powered insights’ subscription; I use it in dumb mode, where I turn it on at night, set the temp, and turn it off in the morning. I can’t even set a timer without the cloud! But I couldn’t turn it off the other morning unless I just pulled the plug…

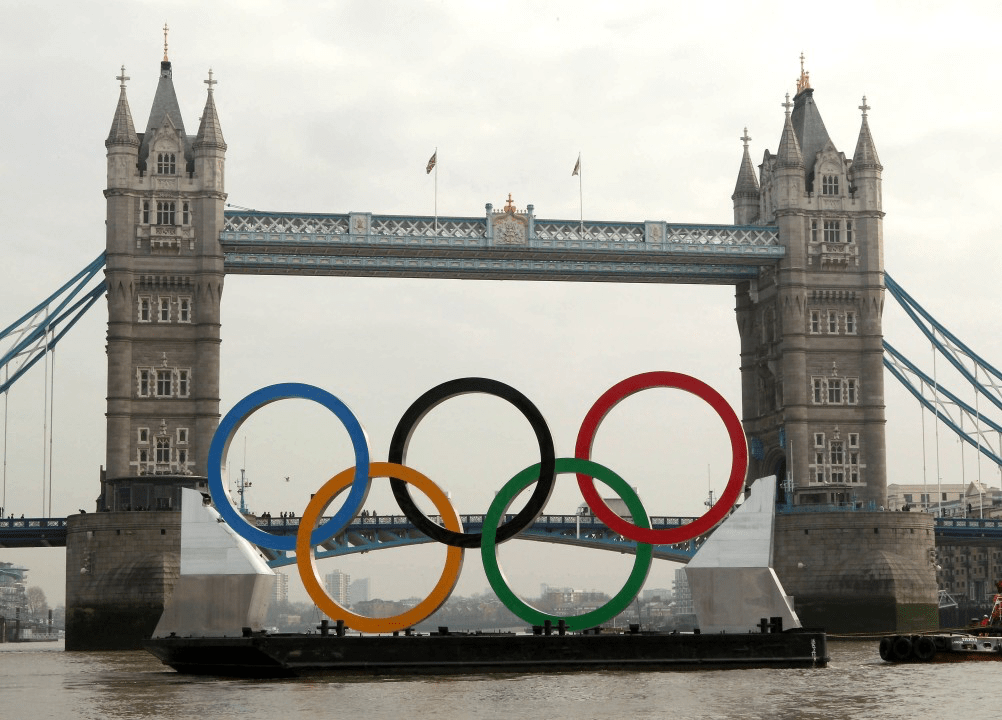

When designing highly resilient services, looking at potential points of failure and understanding their impact is crucial. I’ve spoken in the past about how on the Olympics we worked to a five-nines (99.999%) SLA: we built in layers and layers of redundancy, removed single points of failure, ensured there was contingency at every level, and then tested every single one of them – repeatedly. The same should apply when designing your IT services; the scale of that effort depends on the criticality of your solutions and, of course, the impact on your brand.
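To put a number on what an availability target like that actually allows, here is a minimal Python sketch that turns a target into a yearly downtime budget. The function name and the list of targets are mine, purely for illustration; at five nines the budget works out to a little over five minutes a year.

```python
# Yearly downtime budget for a given availability target.
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def downtime_minutes_per_year(availability: float) -> float:
    """Return the allowed downtime per year, in minutes.

    `availability` is a fraction between 0 and 1, e.g. 0.99999 for five nines.
    """
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) * MINUTES_PER_YEAR


# Illustrative targets only; pick the ones that match your own SLAs.
for label, target in [
    ("three nines", 0.999),
    ("four nines", 0.9999),
    ("five nines", 0.99999),
]:
    print(f"{label}: {downtime_minutes_per_year(target):.2f} minutes/year")
```

Each extra nine cuts the budget by a factor of ten, which is why the effort (and cost) climbs so quickly as the target goes up.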

From multi-AZ and multi-region to multi-cloud, there are levels of resilience that can be built into cloud environments to mitigate the risk of outages. And let’s not forget your own data centres: hybrid approaches can also help in such scenarios, for example with distributed critical systems where the cloud is used for scalability but the core remains on-prem (of course, outages in your own data centre are also a thing; they’re not just the realm of hyperscalers).

The Front Door outage the other week was global, so no matter how well engineered your solution was, it was going down if you relied on Front Door. But a multi-CDN approach, where Application Gateways are fronted by multiple CDNs, would have remained up. The trade-off: increased cost and complexity vs a higher SLA.
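A rough way to reason about that trade-off is to compare the composite availability of a single-CDN path with a multi-CDN one. The sketch below uses the textbook series/parallel formulas and assumes failures are independent, which is optimistic (shared dependencies such as DNS are exactly what bites in outages like these). All the availability figures are hypothetical placeholders, not published SLAs for any real service.

```python
from math import prod


def serial(*availabilities: float) -> float:
    """Every component must be up: multiply the availabilities."""
    return prod(availabilities)


def parallel(*availabilities: float) -> float:
    """At least one component must be up: 1 minus the chance all fail.

    Assumes independent failures, which real systems rarely achieve.
    """
    return 1.0 - prod(1.0 - a for a in availabilities)


# Hypothetical figures for illustration only.
cdn_a = 0.9999
cdn_b = 0.9999
origin = 0.9995  # e.g. the application gateway plus the app behind it

single_cdn = serial(cdn_a, origin)
multi_cdn = serial(parallel(cdn_a, cdn_b), origin)

print(f"single CDN path: {single_cdn:.6f}")
print(f"multi-CDN path:  {multi_cdn:.6f}")
```

With two CDNs in parallel the edge tier stops being the weakest link, so the origin dominates the result. Whatever steers traffic between the CDNs is itself another component in series, and that is where much of the extra cost and complexity comes from.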

The AWS outage also impacted the AWS control plane, which perhaps made it hard for people to understand the full impact and decide whether invoking DR was necessary (or indeed whether they were even able to). For clients in active multi-region set-ups, it certainly didn’t work as planned, for some of them at least!

But for my bed? Is it a mission-critical system? Definitely not (well… perspective is everything!). But one could argue the brand damage here could be quite major (saying that, a lot of people are talking about it, and perhaps are curious…). From a design perspective, however, I think the solution they have built simply makes no sense whatsoever – the unit in my bedroom has, by all accounts, a quad-core ARM processor… yet all it does is connect back to AWS US East 1 for instructions. Indeed, for users who do have the subscription, it apparently sends a full 16GB of data a day!! (Anyone in the Edge AI/ML world would perhaps find it quite startling that, when all it’s doing is collating sample points from sensors, there is zero logic being applied at the edge here.)
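To show what even a little logic at the edge could look like, here is a minimal Python sketch that collapses raw sensor readings into per-minute summaries before anything is uploaded. I have no idea what the device actually samples or how it formats it, so the `(timestamp, value)` reading shape, the `Summary` type and the 60-second window are all my own assumptions.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Summary:
    """One uploaded record per window instead of every raw reading."""
    window_start: int  # epoch seconds at the start of the window
    count: int
    minimum: float
    maximum: float
    average: float


def summarise(samples: list[tuple[int, float]], window_seconds: int = 60) -> list[Summary]:
    """Group (timestamp, value) readings into fixed windows and summarise each."""
    buckets: dict[int, list[float]] = {}
    for timestamp, value in samples:
        start = timestamp - (timestamp % window_seconds)
        buckets.setdefault(start, []).append(value)
    return [
        Summary(start, len(values), min(values), max(values), mean(values))
        for start, values in sorted(buckets.items())
    ]


# Hypothetical readings: one temperature sample per second for two minutes.
readings = [(t, 20.0 + (t % 2)) for t in range(120)]
summaries = summarise(readings)
print(f"{len(readings)} raw readings -> {len(summaries)} summaries")
```

Whether min/max/average is the right summary depends entirely on what the cloud side needs; the point is only that the reduction can happen on the device.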

I can see no good reason why certain functions, like turning it on or off, can’t just be processed locally – sure, I can turn it on when I’m on the other side of the world too… but I’m not sure that’s useful! Limiting functions like a timer is a business decision, but again technically odd! Not only do these decisions impact costs for the company (they are processing lots more data in the cloud than they need to, when edge processing could do plenty of that lifting), we’ve now also seen the impact of an outage on an architecture that, perhaps rightly so, is not highly redundant and multi-region active-active.
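As a sketch of the local-first idea, the Python below applies commands on the device straight away and treats the cloud as something to be told about afterwards, queuing events while it is unreachable. This is not how the real product works; the class, the command names and the `send_to_cloud` callback are all hypothetical.

```python
from collections import deque
from typing import Callable, Optional


class LocalFirstController:
    """Apply commands locally first; report to the cloud on a best-effort basis."""

    def __init__(self, send_to_cloud: Callable[[dict], None], max_pending: int = 1000):
        self.powered = False
        self.target_temp_c = 20.0
        self._send = send_to_cloud
        # Bounded queue: the oldest events are dropped if the outage is very long.
        self._pending: deque = deque(maxlen=max_pending)

    @property
    def pending_count(self) -> int:
        return len(self._pending)

    def handle(self, command: str, value: Optional[float] = None) -> None:
        """Change device state immediately, then try to report the event."""
        if command == "on":
            self.powered = True
        elif command == "off":
            self.powered = False
        elif command == "set_temp":
            if value is None:
                raise ValueError("set_temp needs a value")
            self.target_temp_c = value
        else:
            raise ValueError(f"unknown command: {command}")
        self._pending.append({"command": command, "value": value})
        self.flush()

    def flush(self) -> None:
        """Send queued events in order; stop quietly if the cloud is unreachable."""
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                return  # keep the events and retry on the next flush
            self._pending.popleft()


def cloud_is_down(event: dict) -> None:
    """Stand-in for a cloud API call during an outage."""
    raise ConnectionError("cloud unreachable")


bed = LocalFirstController(cloud_is_down)
bed.handle("on")
bed.handle("off")  # still works with the cloud down
print(bed.powered, bed.pending_count)
```

The remote-control features still need the cloud, but the on/off switch no longer does.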

So… my point? When designing a cloud service, you need to think hard about ‘what would happen if…’ and balance the risks and costs against the very rare chance of a major outage. Yes, it’s been a bad month and these outages are very rare, but they do happen. Being prepared for when they do is crucial, and that needs to start with a fundamental understanding of your business, its applications and users, and the impact on them. A distributed cloud solution can keep your edge working in an outage (and optimise data flows with edge compute!), a resilient hybrid/multi-cloud solution can reduce the impact on your critical services, and it might not be as hard to achieve as you think!